1. Introduction

This document presents the Conscious Effort Project, a next-generation artificial intelligence ensemble system designed to simulate metacognition. Its design targets temporal awareness, self-reflective thought, and multi-modal output.

1.1 Project Overview

By integrating concepts from neuroscience, cognitive science, and machine learning, the Conscious Effort Project aims to create a functional simulation of metacognition, with the goal of giving computers, and eventually robots, a mind of their own.

Real-time interactive data, such as camera, voice, and text input, is processed by a self-expanding system of neural networks.

The project is a work in progress, and this document will be updated as development continues. (Last updated: 5/31/2025)

2. Key Objectives

The Conscious Model represents an attempt to simulate cognition in artificial intelligence. Key objectives include:

- Temporal Awareness: Understanding not just individual states but how they evolve, internalizing the passage of time. This is essential for building a coherent world model.

- Self-awareness: The ability to model itself within its environment, allowing for introspection and self-improvement.

- Advanced Reasoning Capabilities: Implementing logical and causal reasoning based on multi-modal inputs.

- Human-like Interaction: Expressive, natural conversation, with the eventual goal of being able to display anything the AI can imagine.

- Online Learning: The ability to learn from its interactions and observations, improving its understanding of the world over time.

3. Theoretical Foundations

3.1 Neurological Inspirations

3.1.1 The Hippocampus

The hippocampus serves as a key inspiration for the Conscious Architecture's Cognitive System:

- Theta Waves: Simulated to support cognitive functions such as memory encoding and retrieval.

- Hippocampal Clock: Keeps processing synchronized across the entire network, allowing sensory input to be processed sequentially.

- Phase Precession: Replicated to adapt firing patterns based on incoming sequential data, enhancing temporal prediction and memory.
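To make the theta and phase-precession ideas concrete, here is a minimal sketch. It assumes an 8 Hz theta rhythm and a linear precession rule, and neither is a project parameter. As input moves through a cell's field, the theta phase at which the cell prefers to fire shifts from late in the cycle to early. All function names are hypothetical.

```python
import math

THETA_HZ = 8.0  # assumed theta frequency; hippocampal theta is roughly 4-12 Hz
TWO_PI = 2.0 * math.pi


def theta_phase(t: float, freq: float = THETA_HZ) -> float:
    """Phase (radians, in [0, 2*pi)) of the simulated theta oscillation at time t seconds."""
    return (TWO_PI * freq * t) % TWO_PI


def preferred_phase(progress: float) -> float:
    """Hypothetical linear phase-precession rule.

    progress is how far the input has moved through a cell's field, from 0 (entry)
    to 1 (exit). The preferred firing phase starts late in the cycle and moves earlier.
    """
    progress = min(max(progress, 0.0), 1.0)
    return TWO_PI * (1.0 - progress)


def fires(t: float, progress: float, tolerance: float = 0.3) -> bool:
    """A cell fires when the current theta phase is near its preferred phase."""
    diff = abs(theta_phase(t) - preferred_phase(progress))
    diff = min(diff, TWO_PI - diff)  # circular distance between the two phases
    return diff < tolerance
```

Sweeping `progress` from 0 to 1 moves the firing window earlier within each theta cycle, which is the signature of phase precession. In an adaptive version, a learned mapping driven by incoming sequential data would replace the fixed linear rule.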

3.1.2 Sensory Networks

The model mimics the parallel, phased sensory systems of the human brain:

- Parallel Phased Subnets: Multiple subnets process the same sensory input stream in parallel, each at a different phase, so that together they capture different aspects of the input.

- Persistent Output Integration: Persistent signals from the different sensory streams are continuously fed into higher processing layers.
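Here is a minimal sketch of parallel phased subnets with persistent outputs. It assumes a discrete tick and has each subnet sample the shared stream once per cycle at its own phase offset. Between updates, each subnet holds its last output so that higher layers always have a signal to read. The class and function names are hypothetical, and the identity function stands in for a trained subnet.

```python
from typing import Callable, List, Sequence


class PhasedSubnet:
    """Processes the shared input stream every `period` ticks, starting at its
    own phase `offset`, and holds (persists) its last output in between."""

    def __init__(self, offset: int, period: int, fn: Callable[[float], float]) -> None:
        self.offset = offset
        self.period = period
        self.fn = fn
        self.output = 0.0  # persistent output, held between updates

    def tick(self, step: int, sample: float) -> None:
        if step % self.period == self.offset:
            self.output = self.fn(sample)


def run_phased(stream: Sequence[float], n_subnets: int = 4) -> List[List[float]]:
    """Feed one stream to n phase-staggered subnets and return, for every tick,
    the persistent outputs a higher layer would read."""
    # Placeholder feature extractor: identity; real subnets would be trained networks
    subnets = [PhasedSubnet(i, n_subnets, lambda x: x) for i in range(n_subnets)]
    snapshots = []
    for step, sample in enumerate(stream):
        for net in subnets:
            net.tick(step, sample)
        snapshots.append([net.output for net in subnets])
    return snapshots
```

With two subnets and the stream `[1, 2, 3, 4, 5]`, the higher layer sees `[1, 0]`, `[1, 2]`, `[3, 2]`, `[3, 4]`, `[5, 4]`. Each subnet refreshes on alternate ticks, and no slot ever goes empty.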

3.1.3 Neuroplasticity

Similar in spirit to the sparsely gated mixture-of-experts layers in Google's GShard, Referential Channels are implemented by dynamically generating, in parallel, self-modifying deep neural networks with standardized parameters, allowing the system to learn and adapt over time.
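GShard's mixture-of-experts layers use a learned gate to send each token to its top-2 experts. Here is a minimal sketch of that routing pattern. It assumes each Referential Channel behaves like an expert function whose output has the same length as its input, and that the gate is a single linear layer. Both are simplifications for illustration.

```python
import math
from typing import Callable, List, Sequence

# An expert maps a vector to a vector of the same length (an assumption of this sketch)
Expert = Callable[[Sequence[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top2_moe(x: Sequence[float], gate_w: List[List[float]], experts: List[Expert]) -> List[float]:
    """Route x to the two experts with the highest gate probability and mix their outputs."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_w]  # linear gate, one row per expert
    probs = softmax(logits)
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:2]
    norm = sum(probs[i] for i in top)  # renormalize over the selected experts
    out = [0.0] * len(x)
    for i in top:
        y = experts[i](x)
        out = [o + (probs[i] / norm) * yi for o, yi in zip(out, y)]
    return out
```

Experts are just entries in a list, so a new channel can be appended at runtime, together with a matching row in `gate_w`. That is the kind of dynamic growth the Referential Channels call for. GShard itself trains a fixed number of experts, so the growth step is this project's extension, not part of GShard.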

3.2 Cognitive Science Concepts

3.2.1 Global Workspace Theory (GWT)

Based on Bernard Baars' theory, the model centralizes processed data streams in a global workspace:

- Information Distribution: Processed information enters a central system for global awareness.

- Integration Across Subsystems: Information passes through a centralized workspace connecting the sub-processing systems.
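Here is a minimal sketch of one global workspace cycle. It assumes subsystems compete for access using a single scalar salience score, and that the winning content is broadcast to every registered subsystem. The class and field names are hypothetical; a real workspace would carry tensors rather than strings.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Candidate:
    source: str      # which subsystem produced the content
    content: str     # the processed information
    salience: float  # how strongly it competes for workspace access


@dataclass
class GlobalWorkspace:
    listeners: Dict[str, Callable[[Candidate], None]] = field(default_factory=dict)
    current: Optional[Candidate] = None

    def subscribe(self, name: str, callback: Callable[[Candidate], None]) -> None:
        """Register a subsystem to receive every broadcast."""
        self.listeners[name] = callback

    def cycle(self, candidates: List[Candidate]) -> Optional[Candidate]:
        """One workspace cycle: the most salient candidate wins and is broadcast."""
        if not candidates:
            return None
        self.current = max(candidates, key=lambda c: c.salience)
        for callback in self.listeners.values():
            callback(self.current)
        return self.current
```

The competition step gives the centralization half of the theory, and the broadcast loop gives the distribution half. Every subscribed subsystem sees the same winning content on the same cycle.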

3.2.2 Temporal Consciousness Theory (TCT)

An understanding of one's own thoughts as a sequence unfolding through time is critical to the model's simulation of consciousness.

Cogitavi, ergo sum

Or, simply: I thought, therefore I am.

I've long speculated that the present awareness of sequential thought is the very definition of consciousness.

The Conscious Effort Model is designed to simulate this very process in a computational system.

3.3 S.P.I.R.I.T. Framework

Joscha Bach outlined the best acronym representing cognition I've ever seen:

- S: Self

- P: Perpetuating

- I: Intelligent

- R: Recurrent

- I: Information

- T: Transformer

4. System Architecture

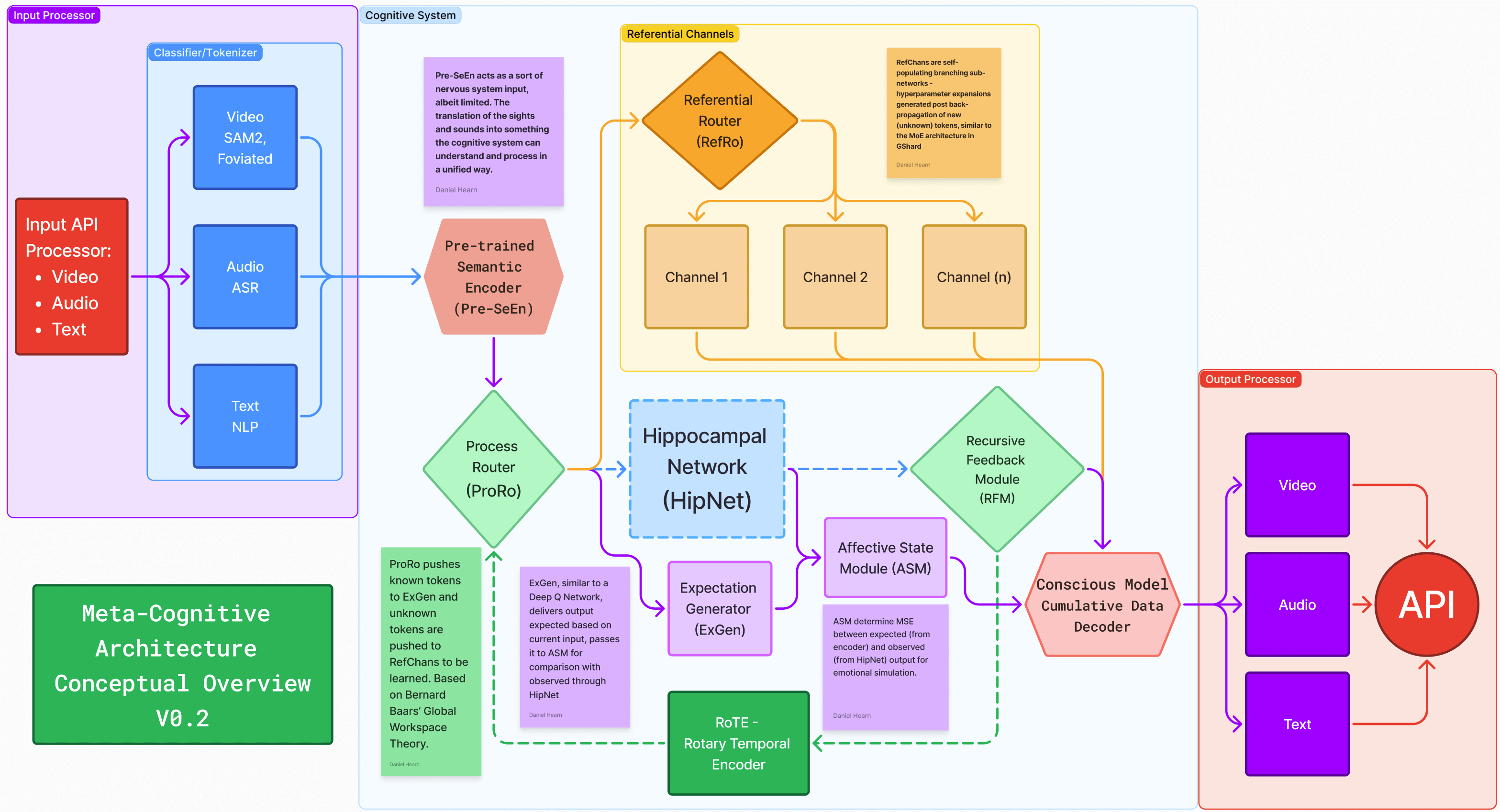

4.1 Overview

The Conscious Effort architecture is built on a modular, ensemble-based approach. It moves beyond traditional transformer architectures by introducing a recurrent cognitive core that simulates the rhythmic processing of the human brain. By decoupling sensory input from internal "thought" cycles, the system can maintain a persistent internal state, allowing it to reflect on its own processing even in the absence of external stimuli.

Each module within the system performs a specific cognitive function, coordinated by the **Hippocampal Clock**, which keeps all modules synchronized across input and output modalities.
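Here is a minimal sketch of how sensory input can be decoupled from internal thought cycles. It assumes a discrete clock that advances the cognitive core on every tick. When input is queued, the core processes it. Otherwise, it reflects on its own most recent state. The class is hypothetical, and strings stand in for the internal representations a real implementation would carry.

```python
from collections import deque
from typing import Deque, List


class HippocampalClock:
    """Hypothetical scheduler: every tick advances the cognitive core,
    whether or not new sensory input has arrived."""

    def __init__(self) -> None:
        self.inbox: Deque[str] = deque()  # sensory input waiting to be processed
        self.state: List[str] = []        # persistent internal state (a trace of "thoughts")

    def perceive(self, item: str) -> None:
        """Sensory side: queue input without blocking the thought cycle."""
        self.inbox.append(item)

    def tick(self) -> str:
        """Cognitive side: one thought cycle per clock tick."""
        if self.inbox:
            thought = f"perceive:{self.inbox.popleft()}"
        elif self.state:
            # No external stimulus: reflect on the most recent internal state
            thought = f"reflect:{self.state[-1]}"
        else:
            thought = "idle"
        self.state.append(thought)
        return thought
```

After `perceive("hello")`, successive ticks yield `perceive:hello` and then `reflect:perceive:hello`. The internal state keeps evolving after the stimulus is gone.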

4.2 System Components Overview

4.2.1 Input Processor API

Handles multi-modal inputs:

- Video: Processed by a classifier using foveation.

- Audio: Handled by a specialized, ASR-like (automatic speech recognition) audio processor.

- Text: Processed by a text analysis module (GPT-like).

The outputs are fed into a Classifier/Tokenizer to normalize the data and then into a Pre-trained Semantic Encoder (Pre-SeEn).
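The video path relies on foveation. Here is a minimal sketch, assuming a grayscale frame stored as a list of rows and a square fovea around a fixation point. The periphery is block-averaged to mimic lower peripheral resolution. The function and its parameters are hypothetical, and the project does not specify how fixation points are chosen.

```python
from typing import List

Image = List[List[float]]


def foveate(img: Image, cx: int, cy: int, radius: int, block: int = 4) -> Image:
    """Return a copy of img where pixels within `radius` (Chebyshev distance)
    of the fixation point (cx, cy) keep full resolution, and every other pixel
    is replaced by the mean of its block x block tile."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]  # copy so the input frame is left unchanged
    for y in range(h):
        for x in range(w):
            if max(abs(x - cx), abs(y - cy)) <= radius:
                continue  # fovea: keep full detail
            by, bx = (y // block) * block, (x // block) * block
            tile = [img[j][i]
                    for j in range(by, min(by + block, h))
                    for i in range(bx, min(bx + block, w))]
            out[y][x] = sum(tile) / len(tile)
    return out
```

The classifier therefore sees fine detail only where it is "looking", which cuts the effective input size while keeping coarse context from the periphery.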

4.2.2 Cognitive System

The core of the architecture, consisting of these components:

- Process Router (ProRo)

- Referential Channels (RefChans)

- Hippocampal Network (HipNet)

- Rotary Temporal Encoder (RoTE)

- Expectation Generator (ExGen)

- Affective State Module (ASM)

- Recursive Feedback Module (RFM)

- Conscious Model Cumulative Data Decoder

4.2.3 Output Processor API

Prepares the system's responses:

- Specialized Output Modules: For video, audio, and text.

- Output API: The final interface for system responses.

4.3 Detailed Component Analysis

4.3.1 Process Router (ProRo)

Architecture: Multi-head attention mechanism with gating networks

Processing:

- Token Classification: Categorizes tokens as "known" or "unknown".

- Attention Distribution: Computes attention weights for each token across multiple heads.

- Routing Decision: Uses a gating network to determine where each token is routed.

Outputs:

- Known tokens: Routed to ExGen and the Referential Channels.

- Unknown tokens: Routed to the Referential Channels for learning.
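Here is a sketch of the known/unknown routing. It assumes a token counts as "known" when its embedding's cosine similarity to some stored prototype is at least an arbitrary 0.8 threshold. This threshold test is a simplified stand-in for the multi-head attention and learned gating network the router is described as using. The prototype dictionary and the string module names are illustrative.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def route_token(embedding: Sequence[float],
                known: Dict[str, Sequence[float]],
                threshold: float = 0.8) -> List[str]:
    """Classify a token as known/unknown by its best match against known
    prototypes, then return the destination modules."""
    best = max((cosine(embedding, proto) for proto in known.values()), default=0.0)
    if best >= threshold:
        return ["ExGen", "RefChans"]  # known: predict with ExGen and reinforce in RefChans
    return ["RefChans"]               # unknown: send to RefChans for learning
```

A learned gate would replace the fixed threshold with a trainable score. The output contract stays the same: known tokens fan out to two modules, and unknown tokens go to one.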

4.3.2 Referential Channels (RefChans)

Architecture: Self-populating deep neural network modules with standardized hyperparameters

Key Components:

- Referential Router (RefRo)

- Referential Channel Networks

- Referential Channel Expansion Module

Processing:

- Token Embedding: Converts tokens to vector representations.

- Information Integration: Combines information from multiple sources.

- Channel Update: Updates channel parameters based on new information.
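Here is a minimal sketch of a self-expanding channel pool. It assumes each channel is summarized by a centroid vector. The router (standing in for RefRo) picks the nearest channel, the channel update is a running mean, and the expansion module spawns a new channel when no existing one is within an arbitrary distance. All names are hypothetical, and a real channel would be a full network rather than a centroid.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Channel:
    centroid: List[float]
    count: int = 1

    def update(self, x: Sequence[float]) -> None:
        """Channel update: move the centroid toward x (running mean)."""
        self.count += 1
        self.centroid = [c + (xi - c) / self.count for c, xi in zip(self.centroid, x)]


class RefChannels:
    """Route each embedding to the nearest channel, or spawn a new channel
    when nothing is close enough."""

    def __init__(self, max_distance: float = 1.0) -> None:
        self.channels: List[Channel] = []
        self.max_distance = max_distance

    def observe(self, x: Sequence[float]) -> int:
        """Return the index of the channel that absorbed x."""
        if self.channels:
            dists = [math.dist(ch.centroid, x) for ch in self.channels]
            best = min(range(len(dists)), key=dists.__getitem__)
            if dists[best] <= self.max_distance:
                self.channels[best].update(x)
                return best
        self.channels.append(Channel(list(x)))  # expansion: create a new channel
        return len(self.channels) - 1
```

The `max_distance` parameter controls how readily the pool grows. A smaller value yields many specialized channels, and a larger one yields fewer, broader channels.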

4.3.3 Hippocampal Network (HipNet)

Architecture: Recurrent neural network with multiple sub-networks

Key Components:

- Theta Oscillator

- Place Cells

- Time Cells

- Pattern Separator

- Pattern Completer

Processing:

- Temporal Encoding: Encodes temporal information in neural patterns.

- Spatial Encoding: Encodes spatial relationships and context.

- Memory Formation: Creates new memory representations.

- Memory Recall: Retrieves and reconstructs stored memories.
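The Pattern Separator and Pattern Completer can be illustrated with a toy memory. Pattern separation uses a fixed random expansion followed by k-winners-take-all, and pattern completion uses best-overlap retrieval. Both are common textbook simplifications and are assumptions here, not the project's design. The code size, sparsity `k`, and seed are arbitrary, and the Theta Oscillator, Place Cells, and Time Cells are not modeled.

```python
import random
from typing import FrozenSet, List, Sequence


class PatternMemory:
    """Toy hippocampal memory: sparse expansion for pattern separation and
    best-overlap retrieval for pattern completion."""

    def __init__(self, in_dim: int, code_dim: int = 64, k: int = 6, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Fixed random projection into a larger space (a dentate-gyrus-style expansion)
        self.proj = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(code_dim)]
        self.k = k
        self.stored: List[FrozenSet[int]] = []

    def separate(self, x: Sequence[float]) -> FrozenSet[int]:
        """Pattern separation via k-winners-take-all: keep the k most active units."""
        acts = [sum(w * xi for w, xi in zip(row, x)) for row in self.proj]
        winners = sorted(range(len(acts)), key=acts.__getitem__, reverse=True)[: self.k]
        return frozenset(winners)

    def store(self, x: Sequence[float]) -> None:
        """Memory formation: keep the sparse code of x."""
        self.stored.append(self.separate(x))

    def complete(self, cue: Sequence[float]) -> int:
        """Pattern completion: index of the stored code that best overlaps the cue's code."""
        if not self.stored:
            raise ValueError("no stored memories")
        code = self.separate(cue)
        return max(range(len(self.stored)), key=lambda i: len(code & self.stored[i]))
```

A partial or noisy cue still retrieves the right memory as long as its sparse code overlaps that memory's code more than any other. Meanwhile, the sparse expansion keeps the codes of similar inputs further apart than the inputs themselves.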

4.3.4 Rotary Temporal Encoder (RoTE)

Architecture: Sinusoidal encoding mechanism with learnable frequency parameters

Key Features:

- Continuous Time Representation: Provides smooth temporal encoding without discretization.

- Multi-scale Encoding: Captures temporal patterns at multiple time scales.

- Relative Position Encoding: Encodes relative temporal positions between events.

Processing:

- Time Discretization: Converts continuous time to discrete intervals.

- Sinusoidal Encoding: Applies sinusoidal transformations to temporal data.

- Concatenation: Combines multiple temporal encodings.
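Here is a minimal sketch of rotary temporal encoding. It uses the standard rotary construction, in which each pair of embedding dimensions is rotated by an angle proportional to the timestamp. A fixed geometric frequency ladder (base 10000, a common convention) stands in for RoTE's learnable frequencies, and that substitution is an assumption. Timestamps can be any real number. Because rotations compose, the dot product of two encoded vectors depends only on their time difference, which is what yields relative position encoding.

```python
import math
from typing import List, Sequence


def frequencies(n_pairs: int, base: float = 10000.0) -> List[float]:
    """Geometric frequency ladder (multi-scale); learnable in RoTE, fixed here."""
    return [base ** (-i / n_pairs) for i in range(n_pairs)]


def rotate(vec: Sequence[float], t: float, freqs: Sequence[float]) -> List[float]:
    """Rotate each (even, odd) pair of vec by angle freq * t.
    t may be any real-valued timestamp, not just an integer position."""
    out: List[float] = []
    for i, f in enumerate(freqs):
        x, y = vec[2 * i], vec[2 * i + 1]
        c, s = math.cos(f * t), math.sin(f * t)
        out += [x * c - y * s, x * s + y * c]
    return out


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))
```

Encoding a query at t = 3.0 and a key at t = 1.0 gives the same dot product as encoding them at t = 10.5 and t = 8.5, because only the 2-second gap matters. High-frequency pairs resolve short intervals, and low-frequency pairs track long ones.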

4.3.5 Expectation Generator (ExGen)

Architecture: Transformer-based sequence-to-sequence model

Key Components:

- Multi-head Self-attention Mechanism

- Feed-forward Neural Network

Processing:

- Temporal Sequence Modeling: Models temporal dependencies in input sequences.

- Prediction Generation: Generates predictions for future states.
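ExGen is described as a trained transformer. Here is a much smaller illustration of attention-based next-state prediction: a single attention head with identity query, key, and value projections. That choice is an assumption, since a real model learns these projections. The latest state queries earlier states, and each earlier state "votes" for the state that followed it. A mean-squared surprise score is one hypothetical way to compare a prediction with what actually arrives.

```python
import math
from typing import List, Sequence

State = Sequence[float]


def predict_next(history: List[State], temperature: float = 1.0) -> List[float]:
    """Attention over the past: the latest state is the query, earlier states are
    keys, and the state that followed each key is its value."""
    if len(history) < 2:
        raise ValueError("need at least two states")
    query = history[-1]
    keys, values = history[:-1], history[1:]  # key i is followed by value i
    scores = [sum(q * k for q, k in zip(query, key)) / temperature for key in keys]
    m = max(scores)  # softmax with max subtraction for stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(len(query))]


def surprise(predicted: State, actual: State) -> float:
    """Mean squared prediction error; high values flag unexpected input."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)
```

For the alternating history A, B, A, B, A, the prediction is approximately B. An incoming B yields near-zero surprise, and an incoming A yields a large one.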

4.3.6 Affective State Module (ASM)

Architecture: Multi-layer perceptron with specialized affect computation layers

Key Features:

- Emotion Representation: Represents emotional states in a vector space.

- Affect Prediction: Predicts emotional responses to stimuli.

Processing:

- Affective Loss Calculation: Computes a loss based on emotional consistency.

- Reward Assignment: Assigns rewards based on affective states.
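Here is a minimal sketch of the affective loss and reward steps. It assumes a two-dimensional valence/arousal representation, a common choice in affective computing that the project does not specify. The consistency loss is the squared distance between predicted and observed affect. The reward, a hypothetical formula, favors positive valence and penalizes inconsistency, with `consistency_weight` as an arbitrary knob.

```python
from dataclasses import dataclass


@dataclass
class Affect:
    valence: float  # negative (-1) to positive (+1); assumed 2-D affect space
    arousal: float  # calm (0) to excited (1)


def affective_loss(predicted: Affect, observed: Affect) -> float:
    """Consistency loss: squared distance between predicted and observed affect."""
    return (predicted.valence - observed.valence) ** 2 + (predicted.arousal - observed.arousal) ** 2


def assign_reward(observed: Affect, loss: float, consistency_weight: float = 0.5) -> float:
    """Hypothetical reward: positive valence helps, affective inconsistency hurts."""
    return observed.valence - consistency_weight * loss
```

A correctly predicted, mildly positive state earns a positive reward. A mispredicted, negative state is penalized twice, once for its valence and once for the prediction error.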

4.4 Implementation Status

The Conscious Effort Project is currently navigating the transition from theoretical modeling to active component prototyping. Our development is organized into several concurrent workstreams:

- ProRo & RefChans (Alpha Phase): We have successfully implemented initial prototypes of the Process Router and Referential Channels. Current benchmarks focus on routing accuracy between known and unknown token spaces.

- HipNet Prototype: Integration of the Theta Oscillator with basic RNN structures to simulate sequential "thought loops" is currently in early-stage testing.

- Multi-modal Pipeline: The Input/Output API wrappers are established, though deep integration with the semantic encoder remains a primary development objective for 2025.

Early testing indicates that the "Conscious Model" strategy effectively reduces hallucination by requiring the ASM (Affective State Module) to validate output against internal consistency checks before final decoding.

5. Applications

Robotics

A generalized robot brain capable of reasoning in uncertain environments, extending humanity's reach into space as we expand beyond Earth.

Research

An advanced research tool for unlocking mysteries in all fields of discovery.

Natural Language Processing

Conversational partners, translation systems, and applications with a deep understanding of context over time.

Healthcare

Real-time healthcare solutions, including early and accurate diagnosis, personalized treatment plans, and remote patient monitoring.

Education

Personalized tutoring systems that can adapt to the unique learning styles and needs of each student.

Business

Predictive analytics, scenario planning, and decision-making assistance in a wide range of industries.

Manufacturing

Predictive maintenance, supply chain optimization, and production process improvement.

6. Future Work

As the project evolves, we are focusing on bridging the gap between digital cognition and physical agency, ensuring that as these systems become more capable, they remain safe and aligned with human values.

- Hardware-Level Guardrails: Moving beyond software-based constraints to implement physical, non-bypassable limitations on system agency to ensure inherent safety in robotic applications.

- Scalable Metacognition: Researching ways to expand the Referential Channel network using distributed GPU clusters without losing the sub-second response times required for natural interaction.

- Physical Embodiment: Integrating the input/output processors with low-latency robotic systems to test spatial awareness in real-world environments.

- Deep Temporal Understanding: Enhancing the RoTE (Rotary Temporal Encoder) to support multi-year long-term memory retrieval without the "forgetting" issues common in standard transformer models.

7. Conclusion

The Conscious Effort Model has been an exercise in patience, often a frustrating one. Trying to understand consciousness is a Sisyphean task, and simulating it can seem impossible.

With the ability to reflect, learn, predict, and output reactions to inputs in a multi-modal fashion, the Conscious Effort Model brings us one step closer to true cognitive AI.

Humanity will populate the stars. But we need help.

8. References

The Conscious Effort Project draws upon a wide array of foundational research in both computer science and cognitive neuroscience.

- Baars, B. J. (1988). A Cognitive Theory of Consciousness. Cambridge University Press.

- Bach, J. (2009). Principles of Synthetic Intelligence. Oxford University Press.

- Tononi, G. (2004). An Information Integration Theory of Consciousness. BMC Neuroscience.

- Vaswani, A., et al. (2017). Attention Is All You Need. NIPS.

- Dehaene, S. (2014). Consciousness and the Brain: Deciphering How the Brain Codes Our Thoughts. Viking.

9. Glossary

- Metacognition: The awareness and understanding of one's own thought processes.

- Temporal Awareness: The ability to perceive and understand the passage of time and temporal relationships.

- Self-awareness: The capacity for introspection and the ability to recognize oneself as an individual separate from the environment.

- Multi-modal: Involving multiple types of input or output (e.g., visual, auditory, textual).

- Neural Network: A computational model inspired by biological neural networks, consisting of interconnected nodes.

- Transformer: A deep learning architecture that uses self-attention mechanisms to process sequential data.

- Attention Mechanism: A technique that allows models to focus on specific parts of input data when making predictions.

- Backpropagation: An algorithm for calculating gradients in neural networks, used for training.

- Loss Function: A function that measures the difference between predicted and actual outputs, used to optimize model performance.

- Embedding: A learned representation of data in a lower-dimensional space that preserves semantic relationships.